Yann Thanwerdas’ PhD defense took place on Tuesday, 24th of May, 2022 at 13:00 in the Morgenstern amphitheatre (Kahn building) at Inria Université Côte d’Azur, and was streamed live on YouTube at https://youtu.be/2-FRDJXRSZk.

Title

Riemannian and stratified geometries of covariance and correlation matrices

Keywords

Riemannian geometry, Covariance matrices, Correlation matrices, Families of metrics, Geodesics, Stratified spaces.

Committee

President – Rodolphe Sepulchre – Professor at University of Cambridge, UK

Reviewer – Pierre-Antoine Absil – Professor at Université de Louvain, Belgium

Reviewer – Marc Arnaudon – Professor at Université de Bordeaux, France

Examiner – Rajendra Bhatia – Professor at Ashoka University, Sonipat, India

Examiner – Minh Ha Quang – Associate Professor at RIKEN Center for AIP, Tokyo, Japan

Examiner – Huiling Le – Emeritus Professor at University of Nottingham, UK

Examiner – Frank Nielsen – Fellow at Sony CSL, Tokyo, Japan

Advisor – Xavier Pennec – Research Director at Inria, Sophia Antipolis, France

Abstract

In many applications, the data can be represented by covariance or correlation matrices, computed between several signals (EEG, MEG, fMRI) or physical quantities (cells, genes), or within a time window (autocorrelation). The set of covariance matrices forms a convex cone that is not a Euclidean space but a stratified space: its boundary is itself a stratified space of lower dimension. The strata are the manifolds of covariance matrices of fixed rank, and the main stratum, made of Symmetric Positive Definite (SPD) matrices, is dense in the whole space. The set of correlation matrices can be described similarly.
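The stratification can be made concrete by reading off the rank of a covariance matrix from its eigenvalues. The sketch below is a minimal illustration, assuming NumPy, a hypothetical helper `stratum_rank` and an arbitrary numerical tolerance: a rank-1 matrix lies on the boundary of the cone, yet adding $\varepsilon I$ makes it SPD for every $\varepsilon > 0$, which illustrates why the SPD stratum is dense.

```python
import numpy as np

def stratum_rank(C, tol=1e-10):
    """Rank of a covariance (symmetric PSD) matrix, i.e. which stratum it belongs to.
    The tolerance `tol` is an arbitrary choice for this illustration."""
    eigvals = np.linalg.eigvalsh(C)
    if eigvals.min() < -tol:
        raise ValueError("matrix is not positive semi-definite")
    return int(np.sum(eigvals > tol))

# A rank-1 covariance matrix lies on the boundary of the cone...
u = np.array([1.0, 2.0, -1.0])
C = np.outer(u, u)
print(stratum_rank(C))  # 1

# ...but it is the limit of the full-rank (SPD) matrices C + eps * I.
for eps in [1e-1, 1e-3, 1e-6]:
    print(eps, stratum_rank(C + eps * np.eye(3)))  # rank 3 for every eps > 0
```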

Geometric concepts such as geodesics, parallel transport and the Fréchet mean have been proposed to generalize classical computations (interpolation, extrapolation, registration) and statistical analyses (mean, principal component analysis, classification, regression) to these non-linear spaces. However, these generalizations rely on the choice of a geometry, that is, of a basic operator such as a distance, an affine connection, a Riemannian metric or a divergence, which is assumed to be known beforehand. In practice, there is often no unique natural geometry that satisfies the constraints of the application. One should therefore explore more general families of geometries that exploit the properties of the data.
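To illustrate how the choice of geometry determines these operations, here is a minimal NumPy sketch of the Fréchet mean and of geodesic interpolation for one particular choice, the log-Euclidean metric. Both have closed forms because, for this metric, the matrix logarithm maps SPD matrices isometrically onto the vector space of symmetric matrices. The helper names are hypothetical.

```python
import numpy as np

def spd_log(S):
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * np.log(w)) @ V.T

def sym_exp(X):
    """Matrix exponential of a symmetric matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(X)
    return (V * np.exp(w)) @ V.T

def log_euclidean_mean(mats, weights=None):
    """Log-Euclidean Frechet mean: exponential of the (weighted) mean of the logarithms."""
    logs = np.array([spd_log(S) for S in mats])
    return sym_exp(np.average(logs, axis=0, weights=weights))

def log_euclidean_geodesic(A, B, t):
    """Point at time t on the log-Euclidean geodesic from A (t = 0) to B (t = 1);
    values of t outside [0, 1] extrapolate."""
    return sym_exp((1 - t) * spd_log(A) + t * spd_log(B))

A = np.diag([1.0, 4.0])
B = np.diag([4.0, 1.0])
M = log_euclidean_mean([A, B])
print(np.allclose(M, 2 * np.eye(2)))                      # True
print(np.allclose(log_euclidean_geodesic(A, B, 0.5), M))  # True: the midpoint is the mean
```

In this example the log-Euclidean mean $2I$ keeps the determinant of the data ($\det = 4$), whereas the arithmetic mean $2.5\,I$ has determinant $6.25$: the Euclidean geometry inflates the mean.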

First, the geometry must match the problem: for instance, degenerate matrices must be rejected to infinity whenever covariance matrices must be non-degenerate. Second, we should identify the invariances of the data under natural group transformations: if scaling each variable independently has no impact, then one needs a metric invariant under the positive diagonal group, for instance a product metric that decouples scales and correlations. Third, good numerical properties (closed-form formulae, efficient algorithms) are essential to use the geometry in practice.
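The second principle can be made concrete with a small sketch, assuming NumPy and two hypothetical helpers. It splits a covariance matrix into standard deviations and a correlation matrix, $\Sigma = \mathrm{diag}(s)\, C\, \mathrm{diag}(s)$, and combines a Euclidean distance on $\log s$ with a Frobenius distance on $C$. The Frobenius part is only a placeholder for a proper correlation geometry. Rescaling every variable by a positive diagonal matrix $\Delta$ leaves $C$ unchanged and translates $\log s$, so this product distance is invariant under the positive diagonal group.

```python
import numpy as np

def scale_correlation(Sigma):
    """Split an SPD covariance matrix as Sigma = diag(s) @ C @ diag(s),
    with s the standard deviations and C the correlation matrix."""
    s = np.sqrt(np.diag(Sigma))
    C = Sigma / np.outer(s, s)
    return s, C

def product_distance(S1, S2):
    """Illustrative product distance: Euclidean distance between log standard
    deviations, combined with a placeholder (Frobenius) distance between correlations."""
    s1, C1 = scale_correlation(S1)
    s2, C2 = scale_correlation(S2)
    d_scale2 = np.sum((np.log(s1) - np.log(s2)) ** 2)
    d_corr2 = np.linalg.norm(C1 - C2, "fro") ** 2
    return np.sqrt(d_scale2 + d_corr2)

rng = np.random.default_rng(0)
X, Y = rng.standard_normal((2, 3, 3))
S1, S2 = X @ X.T + np.eye(3), Y @ Y.T + np.eye(3)
Delta = np.diag([0.5, 10.0, 2.0])  # rescale each variable independently (e.g. a change of units)
print(np.isclose(product_distance(S1, S2),
                 product_distance(Delta @ S1 @ Delta, Delta @ S2 @ Delta)))  # True
```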

In my thesis, I study geometries on covariance and correlation matrices following these principles. In particular, I provide the associated geometric operations which are the building blocks for computing with such matrices.

On SPD matrices, by analogy with the characterization of affine-invariant metrics, I characterize the continuous metrics invariant under the orthogonal group $\mathrm{O}(n)$ by means of three multivariate continuous functions. This yields a classification of metrics: the constraints imposed on these functions define nested classes satisfying stability properties. In particular, I reinterpret the class of kernel metrics, I introduce the family of mixed-Euclidean metrics, for which I compute the curvature, and I survey and complete the knowledge on the classical metrics (log-Euclidean, Bures-Wasserstein, BKM, power-Euclidean).

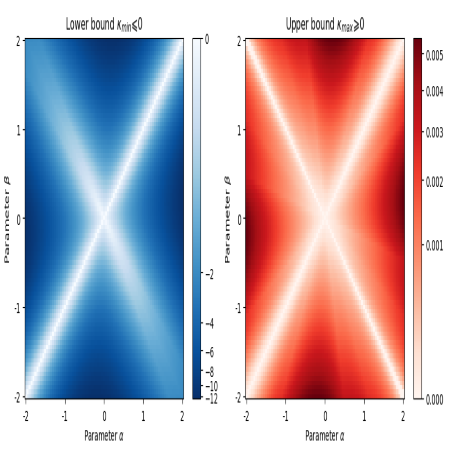
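$\mathrm{O}(n)$-invariance means that $d(QAQ^\top, QBQ^\top) = d(A, B)$ for every orthogonal matrix $Q$. The NumPy sketch below checks this numerically for three of the classical distances mentioned above, using their standard closed forms: $\|\log A - \log B\|_F$ (log-Euclidean), $\frac{1}{|p|}\|A^p - B^p\|_F$ (power-Euclidean, here with the arbitrary choice $p = 0.5$) and $\sqrt{\operatorname{tr} A + \operatorname{tr} B - 2\operatorname{tr}(A^{1/2} B A^{1/2})^{1/2}}$ (Bures-Wasserstein). The helper names are hypothetical, and BKM is not covered.

```python
import numpy as np

def spd_pow(S, p):
    """Power S^p of an SPD matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * w**p) @ V.T

def spd_log(S):
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * np.log(w)) @ V.T

def log_euclidean_dist(A, B):
    return np.linalg.norm(spd_log(A) - spd_log(B), "fro")

def power_euclidean_dist(A, B, p=0.5):
    # Scaled by 1/|p| so that the limit p -> 0 recovers the log-Euclidean distance.
    return np.linalg.norm(spd_pow(A, p) - spd_pow(B, p), "fro") / abs(p)

def bures_wasserstein_dist(A, B):
    # d^2 = tr A + tr B - 2 tr (A^{1/2} B A^{1/2})^{1/2}
    rA = spd_pow(A, 0.5)
    M = rA @ B @ rA
    M = (M + M.T) / 2  # remove rounding asymmetry before the eigendecomposition
    d2 = np.trace(A) + np.trace(B) - 2 * np.trace(spd_pow(M, 0.5))
    return np.sqrt(max(d2, 0.0))

rng = np.random.default_rng(1)
X, Y = rng.standard_normal((2, 3, 3))
A, B = X @ X.T + np.eye(3), Y @ Y.T + np.eye(3)
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))  # random orthogonal matrix
for dist in (log_euclidean_dist, power_euclidean_dist, bures_wasserstein_dist):
    # O(n)-invariance: d(Q A Q^T, Q B Q^T) = d(A, B)
    print(dist.__name__, np.isclose(dist(A, B), dist(Q @ A @ Q.T, Q @ B @ Q.T)))
```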

On full-rank correlation matrices, I compute the Riemannian operations of the quotient-affine metric. Despite its appealing construction and its invariance under permutations, I show that its curvature is of non-constant sign and unbounded from above, which makes this geometry very complex to use in practice. I introduce computationally more convenient Hadamard or even log-Euclidean metrics, along with their geometric operations. To recover the lost invariance under permutations, I define two new permutation-invariant log-Euclidean metrics, one of them being invariant under a natural involution on full-rank correlation matrices. I also provide an efficient algorithm, based on the scaling of SPD matrices, to compute the associated geometric operations.
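The scaling of SPD matrices rests on the fact that every SPD matrix $S$ admits a unique positive diagonal matrix $D = \mathrm{diag}(x)$ such that $DSD$ has unit row sums. One way to see this, used in the sketch below, is that $x$ is the unique minimizer of the strictly convex function $f(x) = \tfrac12 x^\top S x - \sum_i \log x_i$ on $x > 0$, whose gradient $Sx - 1/x$ vanishes exactly when $x_i (Sx)_i = 1$ for all $i$. The damped Newton solver and the name `scale_spd` are assumptions made for illustration; this is not necessarily the algorithm of the thesis.

```python
import numpy as np

def scale_spd(S, tol=1e-12, max_iter=100):
    """Return the positive vector x such that diag(x) @ S @ diag(x) has unit row sums.

    Damped Newton on f(x) = 0.5 * x^T S x - sum(log x), strictly convex on x > 0;
    its gradient S x - 1/x vanishes exactly when x * (S x) = 1.
    """
    x = np.ones(S.shape[0])

    def f(y):
        return 0.5 * y @ S @ y - np.sum(np.log(y))

    for _ in range(max_iter):
        g = S @ x - 1.0 / x
        if np.linalg.norm(g) < tol:
            break
        H = S + np.diag(1.0 / x**2)    # Hessian of f (SPD)
        step = np.linalg.solve(H, -g)  # Newton direction
        t = 1.0
        while t > 1e-12:               # backtracking: stay positive and decrease f (Armijo)
            x_new = x + t * step
            if np.all(x_new > 0) and f(x_new) <= f(x) + 1e-4 * t * (g @ step):
                break
            t *= 0.5
        else:
            break                      # no acceptable step: numerical precision reached
        x = x_new
    return x

C = np.array([[1.0, 0.3, -0.2], [0.3, 1.0, 0.5], [-0.2, 0.5, 1.0]])  # full-rank correlation matrix
x = scale_spd(C)
scaled = x[:, None] * C * x[None, :]  # diag(x) @ C @ diag(x)
print(np.allclose(scaled.sum(axis=1), 1.0))  # True
```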

Finally, I study the stratified Riemannian structure of the Bures-Wasserstein distance on covariance matrices. I compute the domain of definition of geodesics and the injection domain within each stratum and I characterize the length-minimizing curves between all the strata.
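The behaviour of the Bures-Wasserstein distance across strata can be explored with its standard description as a quotient: writing $A = XX^\top$ and $B = YY^\top$, the distance is the minimum of $\|X - YU\|_F$ over orthogonal matrices $U$, which gives $d(A,B)^2 = \operatorname{tr} A + \operatorname{tr} B - 2\operatorname{tr}(A^{1/2} B A^{1/2})^{1/2}$ and stays finite for matrices of any rank. Projecting the straight segment between $X = A^{1/2}$ and the optimally rotated $YU$ (an orthogonal Procrustes problem) gives one length-minimizing curve, even between different strata. The sketch below assumes NumPy and uses hypothetical helper names; it returns one minimizing curve and does not reproduce the full characterization of the thesis.

```python
import numpy as np

def psd_sqrt(S):
    """Symmetric square root of a PSD matrix; tiny negative eigenvalues are clipped to 0."""
    w, V = np.linalg.eigh(S)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T

def bw_dist(A, B):
    """Bures-Wasserstein distance between PSD matrices of any rank:
    d^2 = tr A + tr B - 2 * ||B^{1/2} A^{1/2}||_* (nuclear norm)."""
    d2 = np.trace(A) + np.trace(B) - 2 * np.linalg.norm(psd_sqrt(B) @ psd_sqrt(A), "nuc")
    return np.sqrt(max(d2, 0.0))

def bw_minimizing_curve(A, B, t):
    """Point at time t in [0, 1] on one length-minimizing curve from A to B."""
    X, Y = psd_sqrt(A), psd_sqrt(B)
    P, _, Rt = np.linalg.svd(X.T @ Y)  # X^T Y = P diag(sv) R^T
    U = Rt.T @ P.T                     # orthogonal Procrustes: maximizes tr(X^T Y U)
    Z = (1 - t) * X + t * (Y @ U)      # straight segment between square-root lifts
    return Z @ Z.T                     # project back to covariance matrices

A = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.2], [0.0, 0.2, 1.5]])  # full rank (SPD stratum)
u = np.array([1.0, -1.0, 2.0])
B = np.outer(u, u)                                                  # rank 1 (boundary stratum)
d = bw_dist(A, B)
G = bw_minimizing_curve(A, B, 0.5)
# The midpoint is at half the distance from both endpoints.
print(np.isclose(bw_dist(A, G), d / 2, atol=1e-6), np.isclose(bw_dist(G, B), d / 2, atol=1e-6))
```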